While this can be accomplished with Thin Provisioning, it's not necessarily the point of Thin Provisioning. Thin Provisioning is also about intelligent allocation of existing physical capacity rather than overallocation. If I have purchased 1TB of storage and I know only a portion of it will be used initially, I could thin provision LUNs totaling 1TB while, on the back end, physical allocation happens only as applications write. There's no overallocation in this scheme and, furthermore, I can repurpose unallocated capacity if need be.
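To make the accounting concrete, here is a minimal Python sketch of allocate-on-write without overallocation. It is a toy model only: the `ThinPool` class, its method names, and the four 256GB LUNs are assumptions made for illustration and do not correspond to any array's actual interface.

```python
class ThinPool:
    """Toy model of a storage pool that backs thin LUNs (all sizes in MB)."""

    def __init__(self, physical_mb):
        self.physical_mb = physical_mb
        self.luns = {}  # name -> {"size": provisioned MB, "used": allocated MB}

    def create_lun(self, name, size_mb):
        # Creating a thin LUN consumes no physical space up front.
        self.luns[name] = {"size": size_mb, "used": 0}

    def provisioned_mb(self):
        return sum(lun["size"] for lun in self.luns.values())

    def allocated_mb(self):
        return sum(lun["used"] for lun in self.luns.values())

    def free_mb(self):
        return self.physical_mb - self.allocated_mb()

    def is_overallocated(self):
        return self.provisioned_mb() > self.physical_mb

    def write_new(self, name, mb):
        """Account for `mb` of writes landing on blocks never written before."""
        lun = self.luns[name]
        if lun["used"] + mb > lun["size"]:
            raise ValueError("write exceeds the LUN's provisioned size")
        if mb > self.free_mb():
            raise RuntimeError("pool is out of physical space")
        lun["used"] += mb


pool = ThinPool(physical_mb=1024 * 1024)      # 1TB purchased
for name in ("lun_a", "lun_b", "lun_c", "lun_d"):
    pool.create_lun(name, 256 * 1024)         # four 256GB LUNs, 1TB in total
pool.write_new("lun_a", 50 * 1024)            # first 50GB of application writes

print(pool.is_overallocated())                # False
print(pool.free_mb())                         # 997376
```

The LUNs add up to exactly the purchased capacity, so nothing is overallocated, yet almost all of the physical space is still unallocated and could be given to another LUN if the forecast turns out to be wrong.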

The big problem with storage allocation is that it's directly tied to forecasting, which is risky at best. In carving up storage capacity, too much may be given to one group and not enough to another. The issue is that storage reallocation is difficult: it takes time and resources and, in most cases, requires application downtime. That's why most users request more capacity than they need on day one, and why capacity utilization becomes an issue.

Back to the overallocation scheme. To overallocate, you need two things in place to address the inherent risk of the practice and avoid getting calls at 3am.

1) A robust monitoring and alerting mechanism

2) Automated Policy Space Management

Without these, thin provisioning represents a serious risk and requires constant manual monitoring. That's why with DataONTAP 7.1 we have extended monitoring and alerting within Operations Manager to include thinly provisioned volumes, and also introduced automated Policy Space Management (vol autosize and snap autodelete).
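The idea behind automated policy space management can be sketched in a few lines of Python. This is an illustration of the concept only, not how Data ONTAP implements vol autosize or snap autodelete: the `Volume` class, the `apply_space_policy` function, the 85% threshold, and the choice to grow the volume before deleting snapshots are all assumptions of this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    size_mb: int
    max_size_mb: int       # ceiling for automatic growth
    increment_mb: int      # size of each growth step
    data_mb: int           # space consumed by active data
    snapshots_mb: list = field(default_factory=list)  # oldest first

    def used_mb(self):
        return self.data_mb + sum(self.snapshots_mb)

    def used_fraction(self):
        return self.used_mb() / self.size_mb


def apply_space_policy(vol, threshold=0.85):
    """Grow the volume first, then delete oldest snapshots; return actions taken."""
    actions = []
    while vol.used_fraction() > threshold:
        if vol.size_mb + vol.increment_mb <= vol.max_size_mb:
            vol.size_mb += vol.increment_mb
            actions.append("grow")
        elif vol.snapshots_mb:
            vol.snapshots_mb.pop(0)            # drop the oldest snapshot
            actions.append("delete_oldest_snapshot")
        else:
            actions.append("alert")            # nothing left to automate
            break
    return actions


vol = Volume(size_mb=200, max_size_mb=240, increment_mb=20,
             data_mb=150, snapshots_mb=[40, 30])
actions = apply_space_policy(vol)
print(actions)  # ['grow', 'grow', 'delete_oldest_snapshot']
```

The point of the sketch is that the alert is the last resort rather than the first response: the policy exhausts its automated options before a human has to get involved.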

Another thing I've just read is that when thin provisioning a Windows LUN, a format will trigger physical allocation equal to the size of the LUN. That's not accurate, and to prove the point I have created a 200MB NetApp volume.

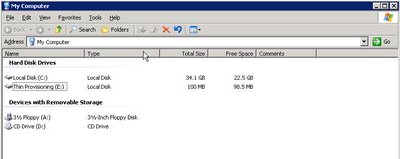

Furthermore, inside that volume I have created a thinly provisioned LUN (100MB), mapped it to a Windows server and formatted it. Note that the "Used" column for the volume hosting this LUN shows 3MB, which is the overhead after the format, whereas the LUN itself (/vol/test/mylun), as shown in the picture, is 100MB. Below is the LUN view from the server's perspective, further proof that the LUN is indeed formatted (drive E:\).

Personally, I would not implement Thin Provisioning for new apps for which I have no usage patterns at all. I would also not implement it for applications that quickly delete chunks of data within the LUN(s) and write new data. Whenever you delete data on the host from a LUN, the disk array doesn't know the data has been deleted. The host basically doesn't tell, or rather SCSI doesn't have a way to tell. Furthermore, when I delete xMB of data from a LUN and write new data into it, NTFS can write this data anywhere. That means some previously freed blocks may be reused, but it also means that blocks never used before can be touched. The latter will trigger a physical allocation on the array.
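The delete-and-rewrite effect is easy to see in a small simulation. The Python sketch below is a toy model under stated assumptions: `ThinLun` is a hypothetical class, blocks are plain integers, and random placement stands in for whatever the file system's allocator actually does (NTFS is not random, it is just not obliged to reuse freed blocks).

```python
import random


class ThinLun:
    """Toy thin LUN: tracks the host's view and the array's view separately."""

    def __init__(self, total_blocks):
        self.total_blocks = total_blocks
        self.in_use = set()     # blocks the host file system considers in use
        self.allocated = set()  # blocks the array has physically backed

    def write(self, count, rng):
        """Host writes `count` blocks to locations the file system sees as free."""
        free = [b for b in range(self.total_blocks) if b not in self.in_use]
        chosen = rng.sample(free, count)
        self.in_use.update(chosen)
        self.allocated.update(chosen)  # a first touch triggers physical allocation
        return chosen

    def delete(self, blocks):
        """Host frees blocks; the array is never told, so `allocated` is unchanged."""
        self.in_use.difference_update(blocks)


rng = random.Random(0)
lun = ThinLun(total_blocks=100)

first = lun.write(40, rng)           # application writes 40 blocks
lun.delete(first[:20])               # then deletes half of them
after_delete = len(lun.allocated)    # array still backs all 40 blocks

lun.write(20, rng)                   # 20 blocks of new data, placed anywhere free
print(len(lun.in_use), after_delete, len(lun.allocated))
```

The host never holds more than 40 blocks of live data, but the array's allocation stays at 40 after the delete and can only go up from there, to as many as 60 blocks depending on how many of the new writes land on blocks that were never touched before.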

1 comment:

You have brought forth some great points. Because physical storage cannot grow infinitely and transparently, thin provisioning carries a risk.

The question is how well this risk can be quantified, if at all, and then weighed against the cost savings.

I guess eventually OS and Apps have to start supporting thin provisioning, in terms of how they access the disk, and also in terms of instrumentation for monitoring and alerting.
