I recently read an article on storage provisioning titled “The Right Way to Provision Storage”. What I took away from it, as a reader, is that storage provisioning is a painful, time-consuming process involving several people representing different groups.

The process according to the article pretty much goes like this:

Step 1: DBA determines performance requirement and number of LUNs and defers to the Storage person

Step 2: Storage person creates the LUN(s) and defers to the Operations person

Step 3: Operations person maps the LUN(s) to some initiator(s) and defers to the Server Admin

Step 4: Server Admin discovers the LUN(s) and creates the Filesystem(s). Then he/she informs the DBA, probably 3-4 days later, that his/her LUN(s) are ready to host the application

I wonder how many storage provisioning requests these folks get per week and how much of their time it consumes. I would guess much more than they would like. A couple of years ago, an IT director of a very large and well-known financial institution told me, “We get over 400 storage provisioning requests a week and it has become very difficult to satisfy them all in a timely manner”.

Why does storage provisioning have to be so painful? It seems to me that one would get more joy out of getting a root canal than asking for storage to be provisioned. Storage provisioning should be a straightforward process, and the folks who own the data (Application Admins) should be directly involved in it.

In fact, they should be the ones doing the provisioning, directly from the host, under the watchful eye of the Storage group. The Storage group controls the process by putting the necessary controls in place at the storage layer, restricting the amount of storage Application Admins can provision and the operations they are allowed to perform. This would be self-service storage provisioning and data management.

A few months back, Dave Hitz described the above process on his blog and used an ATM analogy as an example.

NetApp’s SnapDrive for Unix (Solaris/AIX/HP-UX/Linux) is similar to an ATM. It lets application admins manage and provision storage for the data they own. Through deep integration with various Logical Volume Managers and filesystem-specific calls, SnapDrive for Unix allows administrators to do the following with a single host command:

1) Create LUNs on the array

2) Map the LUNs to host initiators

3) Discover the LUNs on the host

4) Create Disk Groups/Volume Groups

5) Create Logical Volumes

6) Create Filesystems

7) Add LUNs to Disk Group

8) Resize Storage

9) Create and Manage Snapshots

10) Recover from Snapshots

11) Connect to filesystems in Snapshots and mount them on the same host where the original filesystem was (or still is) mounted, or on a different host

The whole process is fast and, more importantly, very efficient. Furthermore, it masks the complexity of the various UNIX Logical Volume Managers and allows folks who are not intimately familiar with them to successfully perform various storage-related tasks.

Additionally, SnapDrive for Unix provides snapshot consistency by making calls to filesystem-specific freeze/thaw mechanisms, providing image consistency and the ability to successfully recover from a Snapshot.
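The freeze/snapshot/thaw ordering can be sketched in a few lines of Python. This is only an illustration of the pattern, not SnapDrive's implementation: `frozen`, `consistent_snapshot`, and the `take_snapshot` callback are hypothetical names, and the freeze and thaw steps are recorded in a list rather than issued to a real filesystem.

```python
from contextlib import contextmanager


@contextmanager
def frozen(fs, log):
    """Quiesce a filesystem for the duration of the block, then always thaw it."""
    log.append(f"freeze {fs}")   # stand-in for a filesystem-specific freeze call
    try:
        yield
    finally:
        log.append(f"thaw {fs}")  # runs even if the snapshot step raises


def consistent_snapshot(fs, snap_name, take_snapshot, log):
    """Take a snapshot while writes to the filesystem are held."""
    with frozen(fs, log):
        take_snapshot(fs, snap_name)
    return log


events = []
consistent_snapshot(
    "/test",
    "test_snap",
    lambda fs, name: events.append(f"snapshot {name} of {fs}"),
    events,
)
print(events)
```

The point of the `finally` clause is that the filesystem is thawed even when the snapshot fails, so a failed backup never leaves the application hung on a frozen filesystem.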

Taking this a step further, SnapDrive for Unix provides the necessary controls at the storage layer and allows Storage administrators to specify who has access to what. For example, an administrator can specify any or a combination of the following access methods.

◆ NONE − The host has no access to the storage system.

◆ CREATE SNAP − The host can create snapshots.

◆ SNAP USE − The host can delete and rename snapshots.

◆ SNAP ALL − The host can create, restore, delete, and rename snapshots.

◆ STORAGE CREATE DELETE − The host can create, resize, and delete storage.

◆ STORAGE USE − The host can connect and disconnect storage.

◆ STORAGE ALL − The host can create, delete, connect, and disconnect storage.

◆ ALL ACCESS − The host has access to all the SnapDrive for UNIX operations.
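To make the "any or a combination" rule concrete, here is a small Python model of the access levels listed above. It is a sketch, not the product's permission format: the operation labels such as `"snap restore"` are made up for this example, and ALL ACCESS is approximated as the union of the operations named in the list.

```python
# Operations granted by each access level, transcribed from the list above.
# The operation labels are illustrative names, not SnapDrive syntax.
ACCESS_LEVELS = {
    "NONE": frozenset(),
    "CREATE SNAP": frozenset({"snap create"}),
    "SNAP USE": frozenset({"snap delete", "snap rename"}),
    "SNAP ALL": frozenset(
        {"snap create", "snap restore", "snap delete", "snap rename"}
    ),
    "STORAGE CREATE DELETE": frozenset(
        {"storage create", "storage resize", "storage delete"}
    ),
    "STORAGE USE": frozenset({"storage connect", "storage disconnect"}),
    "STORAGE ALL": frozenset(
        {"storage create", "storage delete", "storage connect", "storage disconnect"}
    ),
}
# Assumption: "all operations" is modelled as everything named above.
ACCESS_LEVELS["ALL ACCESS"] = frozenset().union(*ACCESS_LEVELS.values())


def allowed_operations(granted_levels):
    """Union of the operations granted by a combination of access levels."""
    ops = set()
    for level in granted_levels:
        ops |= ACCESS_LEVELS[level]
    return ops


def is_allowed(granted_levels, operation):
    """True if any of the granted levels permits the operation."""
    return operation in allowed_operations(granted_levels)


host_levels = ["SNAP ALL", "STORAGE USE"]
print(is_allowed(host_levels, "snap restore"))    # True
print(is_allowed(host_levels, "storage create"))  # False
```

A host granted SNAP ALL plus STORAGE USE can manage its own snapshots and connect to existing storage, but cannot carve out new LUNs, which is exactly the kind of guard rail the Storage group would want for self-service.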

Furthermore, SnapDrive for Unix is tightly integrated with NetApp’s SnapManager for Oracle product on several Unix platforms, which allows application admins to manage Oracle-specific datasets. Currently, SnapDrive for Unix supports Fibre Channel, iSCSI and NFS.

SnapDrive for Unix uses HTTP/HTTPS as a transport protocol with password encryption and makes calls to Data ONTAP’s APIs for storage management tasks.

There’s also a widely deployed Windows version of SnapDrive that integrates with Microsoft’s Logical Disk Manager/NTFS and VSS and allows admins to perform similar tasks. Furthermore, SnapDrive for Windows is tightly integrated with NetApp’s SnapManager for Exchange and SnapManager for SQL products, which allow administrators to obtain instantaneous backups and near-instantaneous restores of their Exchange or SQL Server database(s).

Below are a couple of examples from my lab server showing what it takes to provision storage with SnapDrive for Unix on a Solaris host with Veritas Foundation Suite installed.

Example 1:

In this example I’m creating two LUNs of 2GB each on a controller named filer-a, in a volume named /vol/boot.

The LUNs are named lun1 and lun2. I then create a Veritas disk group named dg1. On that disk group I create a Veritas volume named testvol. On volume testvol, I then create a filesystem with /test as the mount point. By default, unless instructed otherwise via a nopersist option, SnapDrive will also add an entry to the Solaris /etc/vfstab file.
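For readers who cannot see the screenshot, the following Python sketch assembles a command line for the setup just described. It only builds and prints the string; it does not run anything. The `-fs`, `-hostvol`, `-lun` and `-lunsize` flags are taken from the commands readers posted in the comments below. Passing several LUNs after a single `-lun` flag is an assumption, as is the helper name `storage_create_argv`, so check the SnapDrive documentation for the exact multi-LUN syntax.

```python
import shlex


def storage_create_argv(mount_point, disk_group, host_volume, lun_paths, lun_size):
    """Build (but do not run) a 'snapdrive storage create' argument list."""
    if not lun_paths:
        raise ValueError("at least one LUN path is required")
    return [
        "snapdrive", "storage", "create",
        "-fs", mount_point,
        "-hostvol", f"{disk_group}/{host_volume}",
        "-lun", *lun_paths,  # assumption: several LUNs may follow one -lun flag
        "-lunsize", lun_size,
    ]


argv = storage_create_argv(
    "/test",
    "dg1",
    "testvol",
    ["filer-a:/vol/boot/lun1", "filer-a:/vol/boot/lun2"],
    "2g",
)
print(shlex.join(argv))
```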

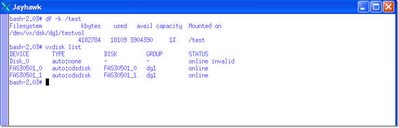

The following is what the filesystem looks like, and what Veritas sees, immediately after the above process has completed:

Example 2:

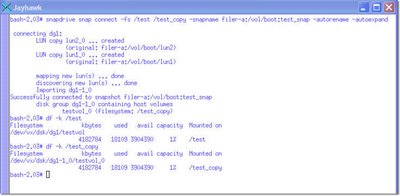

Below, I obtain a snapshot of the Veritas filesystem and name the snapshot test_snap. I then make an inquiry to the array to obtain a list of consistent snapshots for my /test filesystem.

This reveals that I have taken 3 different snapshots at different points in time and I can recover from any one of them. I can also connect to any one of them and mount the filesystem.

Example 3:

Here I'm connecting to the filesystem from the most recent snapshot, test_snap, and I'm mounting a space-optimized clone of the original filesystem as it was at the time the snapshot was taken. Ultimately, I will end up with 2 copies of the filesystem.

The original one, named /test, and the one from the snapshot which I will rename /test_copy. Both of the filesystems are mounted on the same Solaris server (they don't have to be) and are under Veritas Volume Manager control.
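Why the second copy is "space optimized" is easiest to see with a toy copy-on-write model. The Python sketch below is a generic illustration of the idea, not a description of how the array implements it; the `Clone` class and the block contents are invented for the example.

```python
class Clone:
    """Toy copy-on-write clone: reads fall through to the snapshot's blocks."""

    def __init__(self, snapshot_blocks):
        self.base = snapshot_blocks  # shared with the snapshot, never modified
        self.own = {}                # only blocks written after cloning live here

    def read(self, block_no):
        return self.own.get(block_no, self.base.get(block_no))

    def write(self, block_no, data):
        self.own[block_no] = data    # the snapshot's copy stays untouched

    def private_blocks(self):
        return len(self.own)


# Point-in-time image of /test (a real array would not copy the blocks).
snapshot = {0: "superblock", 1: "data-A", 2: "data-B"}

test_copy = Clone(snapshot)
test_copy.write(1, "data-A-modified")

print(test_copy.read(1))           # the clone sees its own change
print(test_copy.read(2))           # unchanged blocks come from the snapshot
print(snapshot[1])                 # the snapshot is still intact
print(test_copy.private_blocks())  # only modified blocks consume new space
```

In this model the clone starts out consuming no additional space and grows only as blocks are overwritten, which is the property that makes mounting a second copy of a filesystem cheap.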

This is how simple and easy it is to provision and manage storage using NetApp's SnapDrive. Frankly, it seems to me that a lengthy process explaining the "proper" way to provision storage adds extra layers of human intervention and unnecessary complexity, and is inefficient and time consuming.

5 comments:

Hello Nick,

Sorry for unrelated comment, couldn't find email address on your blog for communication.

I am a storage blogger and attending the SNW in San Diego. I am contacting fellow storage bloggers to inquire about their plans for SNW. If you are attending SNW, will you be interested in attending a gathering of fellow storage bloggers? Any thoughts on any such gathering are most welcome.

I recently wrote a blog post on this topic http://andirog.blogspot.com/2007/04/say-hello-at-snw.html. I will appreciate any help in spreading the word to fellow bloggers and storage professionals.

Thanks.

Anil

Hi Nick,

I just set up the 3020 but run into a core dump error while using SnapDrive version 2.2 with Solaris 10 and Veritas Volume Manager 4.1. I have to manually create the LUN on the filer, map it to the host, discover it on the host, format, newfs, and update vfstab to mount the LUN. Let me know what is wrong with my syntax. Thank you for your document.

Linh

#snapdrive storage create -fs /data01 -nolvm -lun filer01:/vol/data_EA/data01 -lunsize 1g -igroup host01

Segmentation Fault (coredump)

Hi Linh,

Storage creation of file systems is supported on disk groups that use VERITAS volume manager with the VERITAS file system (VxFS).

This operation is not supported on raw LUNs; that is, the -nolvm option is not supported.

Since you are using VxVM you will need to put the LUN into a VxVM disk group, create a VxVM Volume and then create an fs on top of that.

Also, you should not be receiving the core dump. In fact the proper behavior is... it'll create the LUN(s), map it, discover it on the host. Then it should complain about filesystem creation and give you an error. Then it'll go back and delete the LUN(s) it originally created.
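The create-then-clean-up behavior described above can be sketched as a list of steps, each paired with an undo action. This is a generic Python illustration, not SnapDrive's code; the `provision` helper and the step names are hypothetical, and the steps themselves are no-ops apart from the simulated filesystem failure.

```python
def provision(steps):
    """Run (name, do, undo) steps in order; on failure, undo completed steps in reverse."""
    done = []
    log = []
    for name, do, undo in steps:
        try:
            do()
        except Exception as exc:
            log.append(f"{name}: failed ({exc})")
            for prev_name, prev_undo in reversed(done):
                prev_undo()
                log.append(f"{prev_name}: rolled back")
            return False, log
        log.append(f"{name}: done")
        done.append((name, undo))
    return True, log


def fail_fs():
    # Simulates the unsupported case: a filesystem directly on a raw LUN.
    raise RuntimeError("filesystem creation not supported on raw LUNs")


def noop():
    pass


ok, log = provision([
    ("create LUN", noop, noop),
    ("map LUN", noop, noop),
    ("discover LUN", noop, noop),
    ("create filesystem", fail_fs, noop),
])
print(ok)
for line in log:
    print(line)
```

Undoing in reverse order matters: the LUN has to be removed from the host and unmapped before it is deleted on the array, otherwise stale devices are left behind.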

On Solaris 10, SnapDrive for Unix 2.2 is currently supported with Veritas Storage Foundation Suite 4.1MP1 and the Emulex stack (HBA/driver).

Emulex or QLogic cards using the Solaris Leadville stack are not supported yet, but that support is on its way shortly.

So make sure you're working with the right stack.

Let me know if you need anything else.

Nick

netapp2> fcp show initiator

Initiators connected on adapter 0c:

Portname Group

10:00:00:00:c9:51:d4:39 fcp_syc

10:00:00:00:c9:51:d4:38 fcp_syc

netapp2> iscsi initiator show

No initiators connected

On the other hand, I have a Solaris SPARC machine running Solaris 10, Veritas Volume Manager 5, an Emulex LP11002 with the latest drivers and firmware, and SnapDrive for Solaris 2.2. When I try to create and map a LUN on the Solaris machine, this is the error it sends:

bash-3.00# snapdrive storage create -fs /oratest -hostvol dgtest/testvol -lun netapp2:/vol/vol2/voltest -lunsize 5g

LUN netapp2:/vol/vol2/voltest ... created

mapping new lun(s) ... done

discovering new lun(s) ... *failed*

Cleaning up ...

- LUN netapp2:/vol/vol2/voltest ... deleted, failed to remove devices on host

Cleanup has failed.

Please delete LUN

netapp2:/vol/vol2/voltest

0001-411 Admin error: Could not find physical devices corresponding to LUNs "netapp2:/vol/vol2/voltest" in device list. Make sure filer WWPN is added to the HBA driver configuration file.

Logged into the filer, it sends this message:

netapp2>

Thu Jun 7 14:49:24 CDT [lun.map.unmap:info]: LUN /vol/vol2/voltest unmapped from initiator group v240_iscsi_SdIg

Thu Jun 7 14:49:24 CDT [wafl.inode.fill.disable:info]: fill reservation disabled for inode 100 (vol vol2).

Thu Jun 7 14:49:24 CDT [wafl.inode.overwrite.disable:info]: overwrite reservation disabled for inode 100 (vol vol2).

Thu Jun 7 14:49:24 CDT [lun.destroy:info]: LUN /vol/vol2/voltest destroyed

even when the iSCSI protocol is not running...

netapp2> iscsi status

iSCSI service is not running

I've tried to find the HBA configuration file, as the message says, but didn't find any file. I even searched for that error message number 0001-411 with no luck.

So I created the LUN in FilerView for Solaris, without mapping it to the igroup:

netapp2> lun show

/vol/vol2/voltest 5g (5368709120) (r/w, online)

netapp2>

On the solaris machine I issued the command

bash-3.00# snapdrive host connect -fs /oratest -hostvol dgtest/testvol -lun netapp2:/vol/vol2/voltest

Bus Error (core dumped)

bash-3.00#

Javier,

SnapDrive version 2.2, which is the most current version, does not support Storage Foundation 5.0 yet. That may or may not be your problem.

What you need is:

Solaris 10

Emulex HBAs (you have those)

Emulex Driver (lpfc)6.02h

SnapDrive 2.2

Veritas Foundation suite 4.1MP1

Netapp Host Utilities Kit for Solaris 3.0, 4.0 or 4.1

By default, Solaris 10 ships with the Sun native Emulex driver (emlxs), which is part of the Sun Leadville stack. This type of configuration is being worked on and will be supported in a new version of SnapDrive for Unix.

To get this working you will need to run with the Emulex driver (lpfc). Along with the driver, Emulex also provides a utility (FCA) that lets you unbind the emlxs driver and bind the lpfc one.

http://www.emulex.com/netapp/support/solaris.jsp

The HBA configuration file the message is talking about is lpfc.conf, located in the /kernel/drv directory. If the Emulex driver is installed, this file will exist.
