Various next-generation 4Gb Fibre Channel components began rolling out around mid-2005 with moderate success, primarily because vendors were ahead of the adoption curve. A year later, 4Gb FC has gained considerable momentum, with almost every vendor having a 4Gb offering. With tools and infrastructure in place, backward compatibility, and component availability at or near the same price points as 2Gb, 4Gb is a very well-positioned technology.

The initial intention was to deploy 4Gb inside the rack, connecting enclosures to controllers within the array. However, initial deployments used 4Gb FC for Inter-Switch Links (ISLs) in edge-to-core fabrics or in topologies with considerably low traffic locality. In these environments 4Gb FC greatly increased performance while decreasing ISL oversubscription ratios. It also reduced the number of trunks deployed, which translates to lower switch port burn rates and thus a lower cost per port.
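
To make the oversubscription point concrete, here is a minimal sketch of the arithmetic. The port counts are hypothetical (a 32-port edge switch with 28 host ports), and the ratio is taken simply as aggregate host bandwidth divided by aggregate ISL bandwidth, ignoring traffic locality.

```python
import math

def isl_oversubscription(host_ports, host_gbps, isl_count, isl_gbps):
    """Aggregate host bandwidth divided by aggregate ISL bandwidth."""
    return (host_ports * host_gbps) / (isl_count * isl_gbps)

def isls_needed(host_ports, host_gbps, isl_gbps, target_ratio):
    """Fewest ISLs that keep oversubscription at or below target_ratio."""
    return math.ceil((host_ports * host_gbps) / (isl_gbps * target_ratio))

# Hypothetical edge switch: 28 host ports at 2Gb, 4 ports used as ISLs.
print(isl_oversubscription(28, 2, 4, 2))  # 2Gb ISLs
print(isl_oversubscription(28, 2, 4, 4))  # same ISL count at 4Gb

# ISLs required to hold a 7:1 ratio at each ISL speed.
print(isls_needed(28, 2, 2, 7))
print(isls_needed(28, 2, 4, 7))
```

Under these assumptions, moving the ISLs to 4Gb either halves the ratio with the same number of links or holds the ratio with half the links, which is where the freed switch ports come from.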

As mentioned above, backward compatibility is one of its advantages, since 4Gb FC uses the same 8b/10b encoding scheme as 1Gb/2Gb, the same speed negotiation, and the same cabling and SFPs. The incremental performance of 4Gb over 2Gb also allows for higher QoS for demanding applications and lower latency. Being able to upgrade existing disk subsystems to 4Gb preserves those investments and avoids fork-lift upgrades, which is an added bonus, even though with some vendor offerings fork-lift upgrades and subsequent data migrations will still be necessary.
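
Because all three speeds share 8b/10b encoding, usable bandwidth scales linearly with the line rate. The sketch below works through that arithmetic using the standard Fibre Channel line rates (1.0625, 2.125, and 4.25 GBaud); the function name is mine, and the result is the post-encoding rate before framing overhead, which is why the commonly quoted figures are the slightly lower 100, 200, and 400 MB/s per direction.

```python
# Standard Fibre Channel line rates in GBaud.
LINE_RATE_GBAUD = {"1Gb": 1.0625, "2Gb": 2.125, "4Gb": 4.25}

def post_encoding_mb_per_s(gbaud):
    """MB/s left after 8b/10b encoding: 10 line bits carry 8 data bits."""
    data_bits_per_s = gbaud * 1e9 * 8 / 10
    return data_bits_per_s / 8 / 1e6

for speed, gbaud in LINE_RATE_GBAUD.items():
    print(speed, post_encoding_mb_per_s(gbaud), "MB/s before framing overhead")
```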

Even though most vendors have 4Gb disk array offerings, none that I know of offers 4Gb drives thus far; however, I expect this to change. Inevitably, the question becomes "What good is a 4Gb FC front-end without 4Gb drives?"

With a 4Gb front-end you can still take advantage of cache (medical imaging, video rendering, data mining applications) and RAID parallelism to get excellent performance. There are other benefits as well, such as higher fan-in ratios per target port, which lowers the number of switch ports needed. For servers and applications that deploy more than two HBAs, you can reduce the number of HBAs on the server, free up server slots, and still get the same performance at a lower cost, since a 4Gb HBA costs nearly the same as a 2Gb one.
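
A rough sketch of the HBA and fan-in arithmetic follows. The workload numbers (a server needing 700 MB/s, hosts averaging 25 MB/s each) are hypothetical, the per-port figures are the nominal 200 and 400 MB/s, and the floor of two HBAs is my assumption to preserve multipath redundancy.

```python
import math

def hbas_needed(required_mb_s, hba_mb_s, min_hbas=2):
    """HBAs needed to carry required_mb_s, never fewer than min_hbas."""
    return max(min_hbas, math.ceil(required_mb_s / hba_mb_s))

def max_fan_in(target_port_mb_s, avg_host_mb_s):
    """Hosts that can share one target port at a given average load."""
    return math.floor(target_port_mb_s / avg_host_mb_s)

# Hypothetical server that needs 700 MB/s of aggregate bandwidth.
print(hbas_needed(700, 200))  # 2Gb HBAs
print(hbas_needed(700, 400))  # 4Gb HBAs

# Hypothetical hosts averaging 25 MB/s each against one target port.
print(max_fan_in(200, 25))    # 2Gb target port
print(max_fan_in(400, 25))    # 4Gb target port
```

With these numbers the server drops from four HBAs to two, and each target port serves twice as many hosts, so fewer array and switch ports are consumed for the same load.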

But what about disk drives? To date, there's one disk drive manufacturer with 4Gb drives on the market: Hitachi. Looking at the specs of a Hitachi Ultrastar 15K147 4Gb drive versus a Seagate ST3146854FC 2Gb drive, the interface speed is the major difference. Disk drive performance is primarily determined by the Head Disk Assembly (HDA), through metrics such as average seek time, RPM, and media transfer rate. Interface speed has little relevance if there are no improvements in those metrics. The bottom line is that characterizing a disk drive as high performance strictly based on its interface speed can lead to the wrong conclusion.

Another thing to take into consideration with regard to 4Gb drive adoption is that most disk subsystem vendors source drives from multiple drive manufacturers in order to provide the market with supply continuity. Mitigating the risk of drive quality issues that could occur with a particular drive manufacturer is another reason. I suspect that until we see 4Gb drive offerings from multiple disk drive vendors, the current trend will continue.

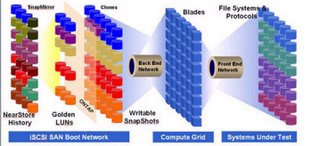

Completed in early 2006, the Kilo-Client project is most likely the world's largest iSCSI SAN, with 1,120 diskless blades booting off the SAN and supporting various operating systems (Windows, Linux, Solaris) and multiple applications (Oracle, SAS, SAP, etc.). In addition, Kilo-Client incorporates various NetApp technological innovations such as:
