As surprising as it may seem, storage virtualization is not a new concept; it has existed for years both within disk subsystems and on hosts. RAID, for example, is a form of virtualization implemented within disk arrays: it reduces the management and administration of many physical disks to a few virtual ones. Host-based Logical Volume Managers (LVMs) are another long-standing virtualization engine that accomplishes similar tasks.

The promise of storage virtualization is to cut costs by reducing complexity, enabling better and more efficient capacity utilization, masking the interoperability issues caused by loose interpretation of existing standards, and, finally, providing an efficient way to manage large quantities of storage from disparate vendors.

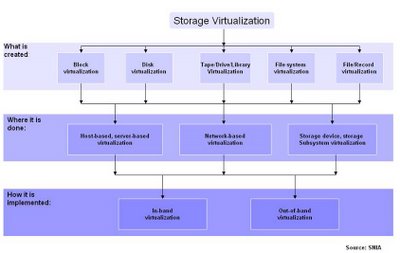

The logical abstraction layer can reside in servers, intelligent FC switches, appliances, or the disk subsystem itself. These methods are commonly referred to as host-based, array-based, controller-based, appliance-based, and switch-based virtualization. Each method is also implemented differently by the various storage vendors, and implementations are subdivided into two categories: in-band and out-of-band virtualization. To make things even more confusing, yet another set of terms has surfaced over the past year or so: split-path versus shared-path architectures. It is no surprise that customers are confused and have been reluctant to adopt virtualization despite its promise.

So let's look at the different virtualization approaches and how they compare and contrast.

Host Based – Logical Volume Manager

LVMs have been around for years, either as third-party software (e.g., Symantec) or as part of the operating system (e.g., HP-UX, AIX, Solaris, Linux). They perform tasks such as disk partitioning, RAID protection, and striping, and some of them also provide Dynamic Multipathing drivers (e.g., Symantec Volume Manager). As is typical of any software implementation, the processing burden falls squarely on the host CPU, although with today's powerful CPUs the impact is much less pronounced. The overall performance of an LVM depends heavily on how efficient the operating system is, or on how well a third-party volume manager has been integrated with the OS. While LVMs are relatively simple to install, configure, and use, they are server-resident software: in a large environment they must be installed and configured on every server, and the same management tasks must be repeated on each one. An advantage of a host-based LVM is that it is independent of the physical characteristics of external disk subsystems; even though these subsystems may differ in performance characteristics and complexity, the LVM can still handle and partition LUNs from all of them.
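
To make the host-based abstraction concrete, here is a minimal sketch of the kind of mapping an LVM maintains: a logical volume is an ordered list of fixed-size extents, and each extent may live on a LUN from a different array. The extent size, the `PhysicalExtent` and `LogicalVolume` names, and the LUN names are hypothetical and chosen for illustration; this is not the on-disk format of any particular volume manager.

```python
from dataclasses import dataclass

# Assumed extent size (4 MiB), chosen only for illustration.
EXTENT_SIZE = 4 * 1024 * 1024


@dataclass(frozen=True)
class PhysicalExtent:
    lun: str    # hypothetical LUN name; the LUN may come from any vendor's array
    index: int  # extent number within that LUN


class LogicalVolume:
    """A logical volume built from an ordered list of physical extents."""

    def __init__(self, extents: list[PhysicalExtent]) -> None:
        self.extents = extents

    @property
    def size(self) -> int:
        return len(self.extents) * EXTENT_SIZE

    def resolve(self, logical_offset: int) -> tuple[str, int]:
        """Translate a logical byte offset into (LUN, byte offset within that LUN)."""
        if not 0 <= logical_offset < self.size:
            raise ValueError("offset lies outside the logical volume")
        extent = self.extents[logical_offset // EXTENT_SIZE]
        return extent.lun, extent.index * EXTENT_SIZE + logical_offset % EXTENT_SIZE


# One volume whose two extents live on LUNs from two different arrays.
lv = LogicalVolume([PhysicalExtent("array_a_lun0", 10), PhysicalExtent("array_b_lun3", 0)])
print(lv.resolve(EXTENT_SIZE + 512))  # ('array_b_lun3', 512)
```

Because the host only sees LUNs, the translation is the same whichever array presents them, which is why the LVM can partition LUNs from heterogeneous subsystems.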

Disk Array Based

Similar to LVMs, disk arrays have been providing virtualization for years by implementing various RAID techniques, such as creating Logical Units (LUNs) that span multiple disks within a RAID Group, or that span RAID Groups by partitioning the array's disks into chunks and then reassembling those chunks into LUNs. All this work is done by the disk array controller, which is tightly integrated with the rest of the array components and provides cache memory, cache mirroring, and interfaces for a variety of protocols (e.g., FC, CIFS, NFS, iSCSI). Disk arrays of this type virtualize their own disks and do not necessarily provide attachments for virtualizing third-party external storage arrays; this is what distinguishes disk array virtualization from storage controller virtualization.
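
The "chunk and reassemble" technique can be sketched as simple address arithmetic. The example below assumes a plain round-robin layout: consecutive chunks of a LUN are dealt across RAID groups in turn. The chunk size, the `locate_block` function, and the RAID group names are hypothetical; real controllers use their own, often more elaborate, layouts.

```python
# Assumed chunk size in blocks; an illustrative value only.
CHUNK_BLOCKS = 128


def locate_block(lba: int, raid_groups: list[str]) -> tuple[str, int]:
    """Map a LUN block address to (RAID group, block offset within that group's slice).

    Consecutive chunks of CHUNK_BLOCKS blocks are dealt round-robin across the
    RAID groups, so one LUN spans all of them.
    """
    if lba < 0:
        raise ValueError("LBA must be non-negative")
    chunk = lba // CHUNK_BLOCKS        # which chunk of the LUN holds this block
    group = chunk % len(raid_groups)   # round-robin choice of RAID group
    row = chunk // len(raid_groups)    # chunks already placed on that group before this one
    return raid_groups[group], row * CHUNK_BLOCKS + lba % CHUNK_BLOCKS


groups = ["RG1", "RG2", "RG3"]  # hypothetical RAID group names
for lba in (0, 130, 389):
    print(lba, locate_block(lba, groups))
# prints: 0 ('RG1', 0) / 130 ('RG2', 2) / 389 ('RG1', 133)
```

Spreading chunks this way lets a single LUN draw on the spindles of several RAID groups, while the host still sees one contiguous address space.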

Storage Controller Based

Storage controller virtualization performs the same functions as disk array virtualization, with one difference: the controller can connect to, and virtualize, various third-party external storage arrays. An example is the NetApp V-Series. From that perspective, the storage controller has the widest view of the fabric, since it acts as a central consolidation point for resources dispersed across the fabric. It does all this while still providing multiple interfaces for different requirements (e.g., CIFS, NFS, iSCSI).

Appliance Based

Fabric-based virtualization comes in several flavors. It can be implemented out-of-band within an intelligent switching platform using switching blades, or with an external appliance operating either in-band or out-of-band. The term in-band denotes the position of the virtualization engine relative to the data flow: an in-band engine sits directly in the path the data travels. In-band appliances tend to split the fabric in two, presenting a host view on one side and a storage view on the other. To the storage arrays, the appliance appears as an initiator (host), establishing sessions and directing traffic between the hosts and the disk arrays. In-band appliances also send metadata describing the location of the data over the same path used to transport the data, which is referred to as a “shared path” architecture. The opposite, in which metadata and data travel over separate paths, is called “split path.” The theory is that separating the paths provides higher performance; however, no real-world evidence validating this claim has been presented to date.
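
A toy model may help show what "in-band" means in practice. In this sketch the appliance holds the virtual-to-physical map and performs every I/O itself, acting as a target toward the host and as an initiator toward the arrays, so mapping lookups and data share one path. Backend arrays are modelled as plain dictionaries, and the `InBandAppliance` class and its counter are hypothetical illustrations rather than any product's design.

```python
# A backend array is modelled as a dict of LBA -> block contents (illustrative only).
Array = dict[int, bytes]


class InBandAppliance:
    """Sits in the data path: a target to the hosts and an initiator to the arrays."""

    def __init__(self, mapping: dict[int, tuple[Array, int]]) -> None:
        self.mapping = mapping    # virtual LBA -> (backend array, physical LBA)
        self.ios_handled = 0      # every host I/O passes through the appliance

    def write(self, vlba: int, data: bytes) -> None:
        array, plba = self.mapping[vlba]
        self.ios_handled += 1
        array[plba] = data        # appliance issues the I/O to the array itself

    def read(self, vlba: int) -> bytes:
        array, plba = self.mapping[vlba]
        self.ios_handled += 1
        return array.get(plba, b"")  # unwritten blocks read back as empty here


array_a: Array = {}
array_b: Array = {}
appliance = InBandAppliance({0: (array_a, 500), 1: (array_b, 7)})
appliance.write(1, b"hello")
print(appliance.read(1), array_b, appliance.ios_handled)
```

The host needs no agent because it only ever talks to the appliance; the trade-off is that the appliance must carry every byte of traffic.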

An out-of-band appliance implementation separates the data path (through the switch) from the control path (through the appliance) and requires agents on each host to maintain the mappings generated by the appliance. While the data path to the disk is shorter in this scheme, installing and maintaining host agents places a burden on the administrator in terms of maintenance, management, and keeping the agents current with each host's OS.
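
For contrast, here is a minimal out-of-band (split-path) sketch under the same toy assumptions: arrays are dictionaries, and the `MetadataAppliance` and `HostAgent` classes are hypothetical. The appliance only answers mapping lookups; a per-host agent caches those mappings and sends the data directly to the array.

```python
Array = dict[int, bytes]  # backend array modelled as LBA -> block contents


class MetadataAppliance:
    """Control path only: hands out mappings and never sees the data itself."""

    def __init__(self, mapping: dict[int, tuple[Array, int]]) -> None:
        self.mapping = mapping  # virtual LBA -> (backend array, physical LBA)
        self.lookups = 0

    def lookup(self, vlba: int) -> tuple[Array, int]:
        self.lookups += 1
        return self.mapping[vlba]


class HostAgent:
    """Runs on each host, caches mappings, and sends I/O straight to the array."""

    def __init__(self, appliance: MetadataAppliance) -> None:
        self.appliance = appliance
        self.cache: dict[int, tuple[Array, int]] = {}

    def _resolve(self, vlba: int) -> tuple[Array, int]:
        if vlba not in self.cache:  # control path: ask the appliance once
            self.cache[vlba] = self.appliance.lookup(vlba)
        return self.cache[vlba]

    def write(self, vlba: int, data: bytes) -> None:
        array, plba = self._resolve(vlba)
        array[plba] = data  # data path: host to array through the switch

    def read(self, vlba: int) -> bytes:
        array, plba = self._resolve(vlba)
        return array.get(plba, b"")


array_a: Array = {}
agent = HostAgent(MetadataAppliance({0: (array_a, 42)}))
agent.write(0, b"data")
print(agent.read(0), agent.appliance.lookups)
```

A production agent would presumably also need a way to invalidate its cache whenever the appliance changes a mapping, and that per-host coordination is part of the administrative burden the approach carries.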

Switch Based

Switch-based virtualization requires the deployment of intelligent blades, or line cards, that occupy slots in FC director-class switches. One advantage is that these blades are tightly integrated with the switch port blades; on the other hand, they consume director slots. These blades run virtualization software, primarily on Linux or Windows operating systems. The performance of this solution depends strictly on the performance of the blade since, in reality, the blade is nothing more than a server. However, some blade implementations use specialized ASICs to address these performance limitations.

Conclusion

The current confusion in the market stems partly from the many implementation strategies and partly from “clear-as-mud” white papers and marketing materials describing the various methods. Regardless of which method you choose, testing it in your own lab is the only way to find out whether the solution is worth the price.