Booting from the SAN provides several advantages:

- Disaster Recovery - Boot images stored on disk arrays can be easily replicated to remote sites, where standby servers of the same hardware type can boot quickly, minimizing the impact a disaster can have on the business.

- Snapshots - Boot images in snapshots can be quickly reverted to a point in time, saving the time and money of rebuilding a server from scratch.

- Quick Deployment of Servers - Master boot images stored on disk arrays can be easily cloned using NetApp's FlexClone capabilities, allowing rapid deployment of additional physical servers.

- Centralized Management - Because the master image is located in the SAN, upgrades and patches are managed centrally and installed only on the master boot image, which can then be cloned and mapped to the various servers. There is no need to repeat upgrades or patch installs on every server.

- Greater Storage Consolidation - Because the boot image resides in the SAN, there is no need to purchase internal drives.

- Greater Protection - Disk arrays provide greater data protection, availability, and resiliency features than servers do. For example, NetApp's RAID-DP functionality provides additional protection in the event of a double drive failure. RAID-DP with SyncMirror also protects against disk drive enclosure failure, loop failure, cable failure, back-end HBA failure, or any 4 concurrent drive failures.

Having covered the advantages, it's only fair to mention the disadvantages, which, although fewer, still exist:

- Complexity - SAN booting is a more complex process than booting from an internal drive. In certain cases, troubleshooting may be more difficult, especially if a coredump file cannot be obtained.

- Variable Requirements - Requirements and support vary from one array vendor to another, and specific configurations may not be supported at all. Requirements also vary with the OS being loaded. Always consult the disk array vendor before you decide to boot from the fabric.

One of the most popular platforms for booting from the SAN is VMware ESX Server 3.0.0. One reason is that VMware does not support booting from internal IDE or SATA drives. The second reason is that more and more enterprises have started to deploy ESX 3.0.0 on diskless blade servers, using VMware's server virtualization to consolidate hundreds of physical servers onto a few blades in a single chassis.

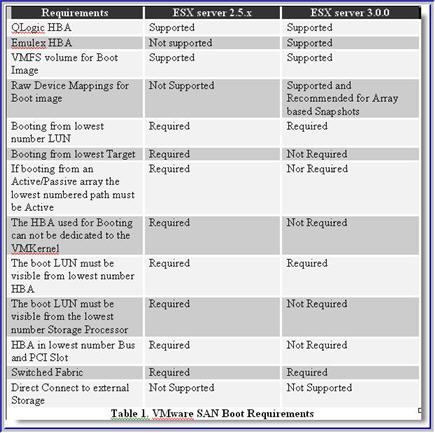

The new ESX 3.0.0 release has made significant advances in supporting boot from the SAN, addressing many of the cumbersome requirements of the previous release.

Here are some differences between the 2.5.x and 3.0.0 versions with regard to SAN booting requirements:

If you are going to boot ESX Server from the SAN, I highly recommend that, before making any HBA purchasing decisions, you contact your storage vendor and carefully review VMware's SAN Compatibility Guide for ESX Server 3.0. You will find that certain Emulex and Qlogic HBA models, as well as certain OEM/rebranded versions of Qlogic HBAs, are not supported for SAN booting.

The setup process is fairly straightforward; however, there are a few things you need to be aware of in order to achieve higher performance and non-disruptive failover should hardware failures occur:

1) Enable the BIOS on only one HBA. You need to enable the BIOS on the second HBA only if you must reboot the server while the original boot HBA, its cable, or the FC switch has failed. In that scenario, use Qlogic's Fast!UTIL to select the active HBA, enable its BIOS, scan the bus to discover the boot LUN, and assign the target WWPN and boot LUN ID to the active HBA. As long as both HBA connections are functional, only one needs its BIOS enabled.

2) One important option to modify is the Execution Throttle/Queue Depth, which sets the maximum number of outstanding commands that can execute on any one HBA port. The default for ESX 3.0.0 is 32. The value you use depends on two factors:

- Total number of LUNs exposed through the array target port(s)

- Array target port queue depth

The formula to determine the value is: Queue Depth = Target Port Queue Depth / Total Number of LUNs Mapped. This formula guarantees that a full load on every LUN at once will not flood the target port and cause QFULL conditions. For example, if a target port has a queue depth of 1024 and 64 LUNs are exposed through that port, the queue depth on each host should be set to 16. This is the safest approach and guarantees no QFULL conditions, because 64 LUNs x 16 = 1024, the target port queue depth.
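The formula and the guarantee behind it can be checked in a few lines. This is a minimal sketch, assuming (as the example does) that the host queue depth applies per LUN, so the worst case at the target port is queue depth times the number of LUNs behind it; the function names are hypothetical.

```python
def host_queue_depth(target_port_queue_depth: int, luns_on_port: int) -> int:
    """Queue depth to set on each host so that every LUN behind one target
    port can be fully loaded at once without overflowing the port's queue."""
    if luns_on_port < 1:
        raise ValueError("at least one LUN must be mapped through the port")
    # Round down: rounding up could push the worst case past the port limit.
    return target_port_queue_depth // luns_on_port


def worst_case_outstanding(queue_depth: int, luns_on_port: int) -> int:
    """Commands queued at the target port if every LUN is fully loaded."""
    return queue_depth * luns_on_port


qd = host_queue_depth(1024, 64)
print(qd, worst_case_outstanding(qd, 64))  # 16 1024
```

Rounding down matters when the division is not exact: with 80 LUNs, 1024 / 80 = 12.8, and only 12 keeps the worst case (960) within the port's 1024.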

If, when applying the same formula, you consider only the LUNs mapped to one host at a time, QFULL conditions become possible. Using the example above, let's assume a total of 64 LUNs and 4 ESX hosts, each with 16 LUNs mapped.

The calculation then becomes: Queue Depth = 1024 / 16 = 64. But a full load on all 64 LUNs produces 64 x 64 = 4096 outstanding commands, far more than the 1024 that the physical array target port can queue. This will almost certainly generate QFULL conditions.

As a rule of thumb, after calculating the queue depth, always leave some room for future expansion in case more LUNs need to be created and mapped; that is, set the queue depth a bit lower than the calculated value. How much lower depends strictly on future growth and requirements. Alternatively, you could use NetApp's Dynamic Queue Depth Management solution, which manages queue depth from the array side rather than from the host.
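The per-host pitfall and the headroom advice can be compared side by side. This sketch uses the example's numbers (a 1024-deep target port, 4 hosts with 16 LUNs each); the 16 extra LUNs of planned growth are a hypothetical figure, and sizing for a projected LUN count is only one way to decide "how much lower".

```python
PORT_QUEUE_DEPTH = 1024
HOSTS = 4
LUNS_PER_HOST = 16
TOTAL_LUNS = HOSTS * LUNS_PER_HOST  # 64 LUNs behind one target port
PLANNED_EXTRA_LUNS = 16             # hypothetical future growth


def worst_case(queue_depth: int, luns: int) -> int:
    """Outstanding commands at the port if every LUN is fully loaded."""
    return queue_depth * luns


# Mistake: sizing from one host's view of the port (16 LUNs).
per_host_qd = PORT_QUEUE_DEPTH // LUNS_PER_HOST
# Safe today: sizing from every LUN mapped through the port (64 LUNs).
port_wide_qd = PORT_QUEUE_DEPTH // TOTAL_LUNS
# Headroom: sizing for the LUN count expected after growth (80 LUNs).
headroom_qd = PORT_QUEUE_DEPTH // (TOTAL_LUNS + PLANNED_EXTRA_LUNS)

for label, qd, luns in (
    ("per-host", per_host_qd, TOTAL_LUNS),
    ("port-wide", port_wide_qd, TOTAL_LUNS),
    ("with headroom", headroom_qd, TOTAL_LUNS + PLANNED_EXTRA_LUNS),
):
    load = worst_case(qd, luns)
    status = "QFULL risk" if load > PORT_QUEUE_DEPTH else "fits"
    print(f"{label:>13}: queue depth {qd:>2}, worst case {load:>4} -> {status}")
```

Only the per-host value (64, worst case 4096) overflows the port; the headroom value of 12 still fits once the LUN count reaches 80.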

To change the queue depth on a Qlogic HBA:

2a) Create a backup copy of /etc/vmware/esx.conf.

2b) Locate the following entry for each HBA:

```
/device/002:02.0/name = "QLogic Corp QLA231x/2340 (rev 02)"
/device/002:02.0/options = ""
```

2c) Modify it as follows:

```
/device/002:02.0/name = "QLogic Corp QLA231x/2340 (rev 02)"
/device/002:02.0/options = "ql2xmaxqdepth=xxx"
```

2d) Reboot the host.

Here, xxx is the queue depth value calculated above.

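3) Another important option that needs modification, which is also exposed in Fast!UTIL, is the port down retry count (qlport_down_retry). To change it in the driver options:

3a) Create a backup copy of /etc/vmware/esx.conf.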

3b) Locate the following entry for each HBA:

```
/device/002:02.0/name = "QLogic Corp QLA231x/2340 (rev 02)"
/device/002:02.0/options = ""
```

3c) Modify it as follows:

```
/device/002:02.0/name = "QLogic Corp QLA231x/2340 (rev 02)"
/device/002:02.0/options = "qlport_down_retry=xxx"
```

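3d) Reboot the host.

Here, xxx is the value recommended by your storage vendor. The equivalent setting for Emulex HBAs is lpfc_nodedev_tmo. The default is 30. If you already set ql2xmaxqdepth in step 2, keep both options in the same space-separated string (for example "ql2xmaxqdepth=16 qlport_down_retry=xxx"); replacing the whole string would discard the queue depth setting.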

In closing, before you decide on your setup, you need to decide whether booting from the SAN makes sense for you and whether your storage vendor supports the configuration(s) you have in mind. In general, if you do not want to manage large server farms with internal drives individually, if you are deploying diskless blades, or if you would like to take advantage of disk-array-based snapshots and cloning for rapid recovery and deployment, then you are a candidate for SAN booting.

12 comments:

Booting VMware from a SAN has pros and cons, but one thing should be said to anyone considering it: it takes 15 minutes to provision VMware ESX from bare metal with an install CD. You have to add time for configuration, but you don't need to mess around with BIOS and adapter firmware levels and make sure they're supported by VMware.

I agree with you: there are pros and cons to SAN booting in general, not just for ESX. However, the argument one can make is this:

I'm already booting all the VMs from a SAN, so why not the ESX server?

In fact, I'd claim that with VM mobility, and the ability to snap, migrate, replicate, and archive, booting ESX from the SAN might offer more pros than cons.

I'd also claim that with a snapshot image of ESX, the 15-minute provisioning becomes no more than 3-4 minutes. In fact, it'll take longer for the box to boot up than it takes to map a LUN clone.

Nick,

To the best of your knowledge, does ESX boot from SAN support multipathing?

In my case, I have HP BL20p G3 blades with dual Qlogic 2312 HBAs and a few NetApp 3020s.

I'm able to boot from SAN, but I'm curious whether we can configure MPIO for boot from SAN. We had an FC switch failure caused by human error; the secondary path did not kick in, and ESX crashed miserably.

Looking forward to your response,

Thanks

ServerChief.com

Serverchief, ESX fully supports SAN boot with multipathing. I have 2 boxes set up like this in the lab.

The issue is most likely related to the HBA driver timeouts. If these are not set appropriately, I'd expect the server(s) to crash.

NetApp has a Host Utilities kit for ESX that installs at the service console. It consists of a set of scripts with various functions. One of the scripts, config_hba, sets all the timeout parameters for either QLA or Emulex cards.

The utilities are installed in /opt/netapp/santools/bin. Check whether they are installed. If they are, run:

```
# config_hba --query
```

The above will return the driver version used and the qlport_down_retry value. The value should be 60. If it's not, then you'll need to set it:

```
# config_hba --configure
```

It does require a host reboot to take effect.

If you don't have the Host Utilities installed, you need to set it using the esxcfg-module command, but first you need to know the current value:

```
# esxcfg-module -q
```

To set it:

```
# esxcfg-module -s qlport_down_retry=60 qla2300_707
```

Then reboot the host.
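If you need more than one driver option (for example a queue depth alongside qlport_down_retry), my understanding is that esxcfg-module -s sets the module's whole option string rather than appending to it, so every option belongs in one quoted argument. Below is a hypothetical helper that only builds the command line for you to paste at the console; it runs nothing. qla2300_707 is the module name from the command above and may differ on your host, and 16 is just the example queue depth. Confirm the result with esxcfg-module -q before rebooting.

```python
import shlex


def esxcfg_module_set_command(module: str, options: dict) -> str:
    """Build an esxcfg-module -s command line carrying all options at once.

    Assumption: -s replaces the module's option string, so options set
    separately would overwrite each other.
    """
    option_string = " ".join(f"{key}={value}" for key, value in options.items())
    argv = ["esxcfg-module", "-s", option_string, module]
    # Quote each argument so the space-separated option string stays one argument.
    return " ".join(shlex.quote(arg) for arg in argv)


print(esxcfg_module_set_command(
    "qla2300_707", {"ql2xmaxqdepth": 16, "qlport_down_retry": 60}
))
```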

Nick,

Thanks for your response. I'm about to try what you mentioned above.

Can the same be done on RHEL 4 U3? I was told it's a RHEL 4 limitation and that I should upgrade to RHEL 5. I'm curious whether you got it to work.

I'm having a painful time getting this to work. I read the NetApp manual on "FCP Host Utils 3" and followed it to the letter, but with no success.

Any help is greatly appreciated.

Regards

ServerChief

RHEL 4 with native multipathing (DM-MP) does not support SAN booting. It fails miserably; DM-MP cannot run on the root or boot device.

I too was under the impression that RHEL 5 would support this, but I haven't tried it yet.

Having said that, there is a way to SAN boot in a multipathing configuration: use Qlogic's failover driver.

I must warn you that while this method works from a multipathing perspective, we've decided not to support SAN boot on Linux with it because it's quirky when it comes to device discovery and initial OS installation.

The first quirk is that while you're installing the OS, you cannot have more than one path to the LUN, as the Anaconda installer gets quite upset.

The second quirk has to do with discovering new devices after you've booted. While Qlogic provides a script that scans for new LUN(s) without having to unload/reload the FC driver, it does not always work. That means the only sure way to discover a new device is by unloading and reloading the driver... but here's the catch-22: you can't unload the FC driver because you are already using it to SAN boot. That leaves you with one alternative: reboot.

Nick,

You can scan for new devices using the Qlogic script that comes with the NetApp Host Utilities.

I have not had any issues scanning for and finding new LUNs, or existing LUNs over a new path. I install the system via one path, then reinstall the Qlogic drivers, enable the second path on the FC switch, and rescan. I then see the second path.

My issue is enabling DM-MP when using both paths after the install.

When the system boots, right after the initrd loads, Linux complains about a duplicate path and goes haywire, locking the LUN in read-only mode. Once the LUN goes read-only, the system can no longer write to it, and after a few complaints it halts.

Do you know how to make the system mark one path as active and the second as passive without locking up the LUN?

I've read a lot of posts, but none seem to cover my configuration with Qlogic, NetApp, and RHEL.

Thanks for your support,

Regards

ServerChief

Serverchief,

By default, the Qlogic 8.x driver has ql2xfailover=1 in /etc/modprobe.conf, which enables multipathing for all LUNs (boot LUN included). This is active/passive on a per-LUN basis, but you can balance the total number of LUNs across the HBAs.

It seems to me (correct me if I'm wrong) that you are trying to run with both the QLA failover driver and DM-MP, the latter of which does not support boot LUNs in any mode (active/active or active/passive).

I suggest you part ways with DM-MP, since you're booting from the fabric, and use only the QLA failover driver. Then use SANsurfer to manage the paths.

Nick,

Now you've caught me by surprise. NetApp Host Utils 3 specifically mentions using DM-MP for multipathing to work with Qlogic; that is why I'm stuck here.

I'll try it as you said: Qlogic alone, with SANsurfer.

I'll keep you posted.

Thanks again,

ServerChief

Serverchief,

DM-MP is definitely recommended for environments that *do not* boot from SAN.

Your environment does not fall in that category.

The way to get SAN boot working on Linux with multipathing is to deploy the Qlogic failover driver. This works, and even though it's not officially on the support matrix for RHEL 4, there are NetApp environments that have filed PVRs to get support for it and have been approved for both RHEL 3 and RHEL 4.

So my recommendation would be to have your NetApp account SE or partner SE file a PVR for your config. It will get approved.

Your BFS multi-pathing comment was very interesting, and I logged on to NOW to grab the binaries and check it out in the lab.

But the release notes reference NetApp bug 239978 which recommends NOT booting from SAN on iSCSI.

Also I can't find any NetApp docs referencing the multipathing config for BFS. Our NetApp guy is not convinced.

Can you point me to any docs?

FC and hardware iSCSI SAN boot with ESX 3.0.1 and above is problematic: if you lose the active path to the boot LUN, you lose connectivity to the console.

This problem is fixed with 3.5 U2 and VMware's latest patch bundle.
